I wrote an article about macros, variables and templating that I recommend you read here.

Since it entered the Apache Software Foundation’s incubator in 2016, Airflow has seen great adoption by the community for designing and orchestrating ETL pipelines and ML workflows.

#Dag airflow code

Ok, now let me show you the easiest way to generate your DAGs dynamically.

You must know that Airflow loads any DAG object it can import from a DAG file. That means the DAG must appear in `globals()`.

Let’s say you want to get the price of specific stock market symbols such as AAPL (Apple), FB (Meta), and GOOGL (Google). Create a Python file in your folder `dags/` and paste the code below:

`from airflow import DAG`
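To make the `globals()` point concrete, here is a minimal sketch of the registration pattern. It assumes a stand-in class instead of the real `airflow.DAG` so that it runs without an Airflow installation. The `StandInDAG` class, the `create_dag` helper and the `get_price_<symbol>` ids are placeholders of mine, not Airflow API.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class StandInDAG:
    """Hypothetical stand-in for airflow.DAG so the sketch runs without Airflow."""
    dag_id: str
    start_date: datetime
    symbol: str


# Ticker symbols for Apple, Meta and Google.
SYMBOLS = ["AAPL", "FB", "GOOGL"]


def create_dag(symbol: str) -> StandInDAG:
    # In a real DAG file this is where the airflow.DAG and its tasks would be built.
    return StandInDAG(
        dag_id=f"get_price_{symbol}",  # assumed naming scheme
        start_date=datetime(2021, 1, 1),
        symbol=symbol,
    )


for symbol in SYMBOLS:
    dag = create_dag(symbol)
    # Bind every DAG to its own module-level name. Relying on the loop
    # variable alone would leave only the last DAG visible at module level.
    globals()[dag.dag_id] = dag

# Rough imitation of discovery: list the module-level names that hold a DAG
# registered under its own dag_id.
discovered = sorted(
    name
    for name, value in globals().items()
    if isinstance(value, StandInDAG) and name == value.dag_id
)
print(discovered)
```

In an actual DAG file, `create_dag` would return a real `DAG` object, and the `globals()[dag_id] = dag` line is the part that carries over unchanged.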

#Dag airflow series

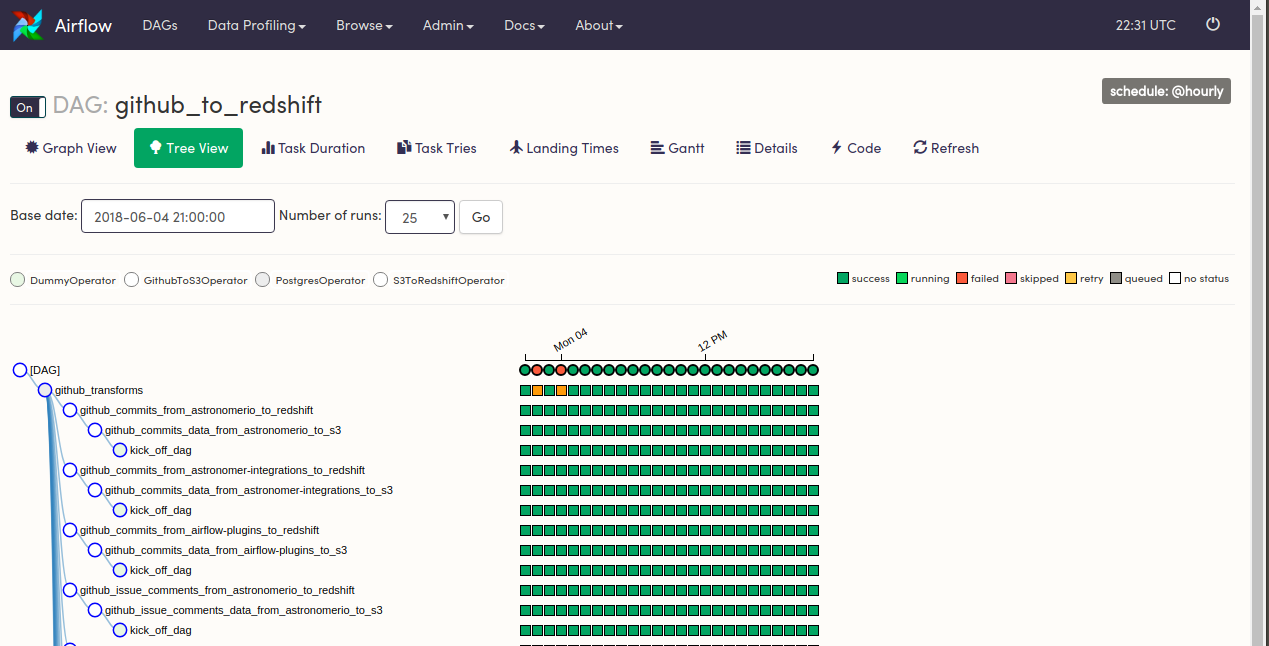

Dynamic DAGs are when you create DAGs based on static, predefined, already known values (configuration files, environments, etc.). Dynamic tasks are when you make tasks based on the output of previous tasks. Today, it’s not possible (yet) to do that: Apache Airflow needs to know what your DAG (and so the tasks) will look like to render it. Notice that an AIP, Dynamic Task Mapping, is coming soon.

Data pipelines, denoted as DAGs in Airflow, are essential for creating flexible workflows. Apache Airflow’s rich web interface allows you to easily monitor pipeline run results and debug any failures that occur. Because of its dynamic nature and flexibility, Apache Airflow has benefited many businesses today.

An Airflow DAG defined with a `start_date`, possibly an `end_date`, and a non-dataset schedule defines a series of intervals which the scheduler turns into individual DAG runs and executes.

If you share your Airflow environment with multiple teams, testing whether there are owners defined in the DAG definition is a good practice. If you are using the convenient way of sending email using the `email_on_failure` DAG argument, checking whether the field is present will be helpful.
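The two checks mentioned above (an owner is defined, and the email field is present when `email_on_failure` is used) could be sketched as a small function over a DAG’s `default_args`. This is only an illustration: the function name, the sample DAG ids and the addresses are made up, and I am assuming the recipient field is the `email` key of `default_args`.

```python
from typing import Any, Dict, List


def find_default_args_problems(dag_id: str, default_args: Dict[str, Any]) -> List[str]:
    """Return human-readable problems found in one DAG's default_args."""
    problems: List[str] = []

    # Shared environments: every DAG should name an owner.
    if not default_args.get("owner"):
        problems.append(f"{dag_id}: no owner defined")

    # email_on_failure is only useful if there is an address to send to.
    # Assumption: the recipients live under the "email" key.
    if default_args.get("email_on_failure") and not default_args.get("email"):
        problems.append(f"{dag_id}: email_on_failure is set but email is missing")

    return problems


# Hypothetical default_args for two DAGs, keyed by dag_id.
dags_to_check = {
    "get_price_AAPL": {
        "owner": "data-team",
        "email_on_failure": True,
        "email": ["oncall@example.com"],
    },
    "get_price_FB": {"email_on_failure": True},
}

all_problems = [
    problem
    for dag_id, default_args in dags_to_check.items()
    for problem in find_default_args_problems(dag_id, default_args)
]
print(all_problems)
```

With Airflow installed, one way to feed it real data would be to loop over the DAGs in a `DagBag` and pass each DAG’s `default_args`. I have left that out so the sketch stays runnable on its own.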

#Dag airflow how to

If you move from a legacy system to Apache Airflow, porting your DAGs may be a nightmare without dynamic DAGs. Guess what? That’s what dynamic DAGs solve. 🤩

The confusion with Airflow Dynamic DAGs

The beauty of Airflow is that everything is in Python, which brings the power and flexibility of this language. Thanks to that, it’s pretty easy to generate DAGs dynamically.

Before I show you how to do it, it’s important to clarify one thing. Dynamic DAGs are NOT dynamic tasks.

#Dag airflow update

Very simple DAG.

Now, let’s say this DAG has different configuration settings:

- source (could be a different FTP server, API route, etc.)
- statistics (could be mean, median, standard deviation, all of them or only one of those)
- destination table (could be a different table for each API route, folder, etc.)

Also, you could have different settings for each of your environments: dev, staging, and prod.

The bottom line is that you don’t want to create the same DAG, the same tasks repeatedly with just slight modifications:

- you waste your time (and your time is precious).
- it’s harder to maintain, as each time something changes you will need to update all of your DAGs one by one.

Airflow Sub DAG is in a separate file in the same directory. Airflow Sub DAG has been implemented as a function. Airflow Sub DAG id needs to be in the following format: `parent_dag_id.child_dag_id`. Check out the `dag_id` in step 2. Call the Sub-DAG. Next, let’s create a DAG which will call our sub dag.

To expose the service(s) through a cloud provider’s load balancer, set `*.service.type=LoadBalancer`.
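Going back to the DAG configuration settings listed above (source, statistics, destination table, environment), one way to avoid copy-pasting DAGs is to describe the variations as data and derive one configuration per DAG from it. The sketch below is plain Python with no Airflow import. All names in it (`PIPELINES`, `build_dag_configs`, the `process_<source>_<env>` id scheme, the table names) are assumptions for illustration.

```python
from itertools import product
from typing import Any, Dict, List

ENVIRONMENTS = ["dev", "staging", "prod"]

# Hypothetical pipeline variations: one entry per source.
PIPELINES: List[Dict[str, Any]] = [
    {"source": "ftp", "statistics": ["mean"], "destination_table": "ftp_stats"},
    {
        "source": "api",
        "statistics": ["mean", "median", "std"],
        "destination_table": "api_stats",
    },
]


def build_dag_configs(
    pipelines: List[Dict[str, Any]], environments: List[str]
) -> Dict[str, Dict[str, Any]]:
    """Return one configuration per (environment, pipeline) pair, keyed by dag_id."""
    configs: Dict[str, Dict[str, Any]] = {}
    for env, pipeline in product(environments, pipelines):
        dag_id = f"process_{pipeline['source']}_{env}"  # assumed naming scheme
        configs[dag_id] = {
            **pipeline,
            "environment": env,
            # Assumed convention: prefix the table with the environment name.
            "destination_table": f"{env}_{pipeline['destination_table']}",
        }
    return configs


dag_configs = build_dag_configs(PIPELINES, ENVIRONMENTS)
for dag_id in sorted(dag_configs):
    print(dag_id, dag_configs[dag_id]["destination_table"])
```

Each entry of `dag_configs` holds what a DAG factory would need to build one DAG, so a change to the shared logic happens in one place instead of in every copy.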

RabbitMQ packaged by VMware - Expose services

The service(s) created by the deployment can be exposed within or outside the cluster using any of the following approaches:

- Ingress: This requires an Ingress controller to be installed in the Kubernetes cluster. Set `*.ingress.enabled=true` to expose the corresponding service(s) through Ingress.
- Cluster IP address: This exposes the service(s) on a cluster-internal IP address. This approach makes the corresponding service(s) reachable only from within the cluster. Set `*.service.type=ClusterIP` to choose this approach.
- Node port: This exposes the service(s) on each node’s IP address at a static port (the NodePort). This approach makes the corresponding service(s) reachable from outside the cluster by requesting the static port using the node’s IP address, such as `NODE-IP:NODE-PORT`. Set `*.service.type=NodePort` to choose this approach.
- Load balancer IP address: This exposes the service(s) externally using a cloud provider’s load balancer.
